Our software consulting life with Zerrtech has found us designing quite a few React web apps for clients. The first modern JS framework we used to build complex web apps was Angular 1, but now we find it easiest to follow good front-end development design patterns in React.

As part of creating quality standards for the React apps we architect, we wanted to collect the design best practices from the many React apps we've designed into one place. We gave a presentation on this at the Boise Frontend Development meetup in June 2018. Here are the slides we used in PDF form. We intend this to be an evergreen document that evolves as our experience grows, so we'll keep it up to date periodically.

Could you use any software help? We would love to bring our experiences designing React apps to your team. Connect with me, Jeremy Zerr, on LinkedIn and let's chat!

React has really gained a lot of traction with frontend web developers. It makes it easy to follow design patterns that have evolved over the years.

React Design Best Practices - Overview

Our Philosophy

Be practical - know the ideal but be realistic.

Our projects involve designing real software for real clients with budget and timeline constraints. We serve our clients best when we know the ideal but also know what can be sacrificed to meet real-world demands.

Don't require devs to remember a bunch of rules.

We all have more important things we need to think about, like solving hard problems in elegant ways. Trying to memorize a bunch of rules is a sign of not using the right tools or systems.

Use tools that encourage education

Building a developer's intuition about how to code better and follow best practices shouldn't take the creativity out of the art of coding. Over time, tools and editors can help best practices become second nature.

Don't be so set in your ways

Question the process. Any list of best practices is fluid, and some practices do not fit every project.

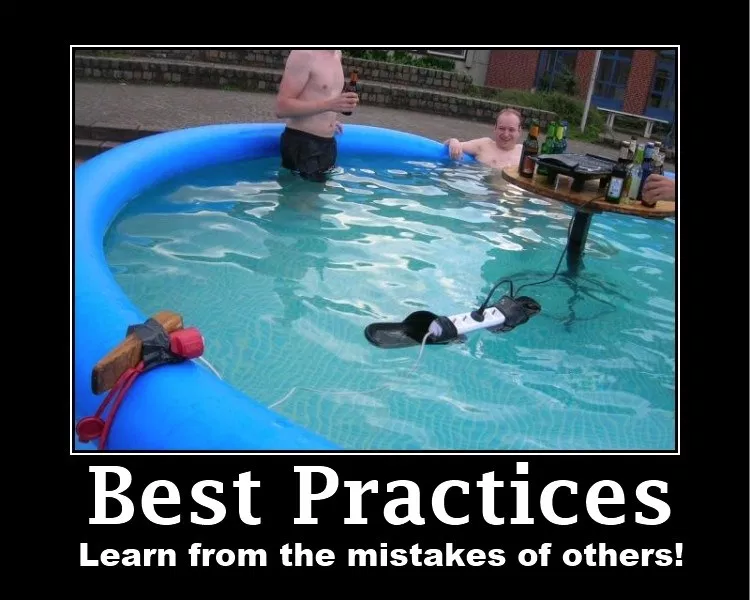

Learn from the mistakes of others

Meetups and colleagues are great resources. Of course, experiencing the pain yourself does wonders!

Ways to improve code (in general)

Questions to ask yourself while coding

Could I have prevented this bug from happening?

What did I do to cause this difficulty? Take responsibility; don't blame others.

Learn from refactoring and do it better the first time on the next project

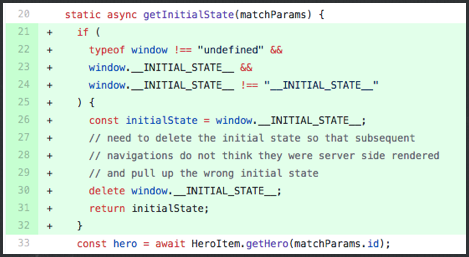

Look out for code smells (some that are common in React)

Duplicated code

Large classes

Too many arguments/attributes

Lines that are too long

Your linter should help grow your intuition on these so they become second nature

Project Setup/Structure Best Practices

Using create-react-app vs. other boilerplates

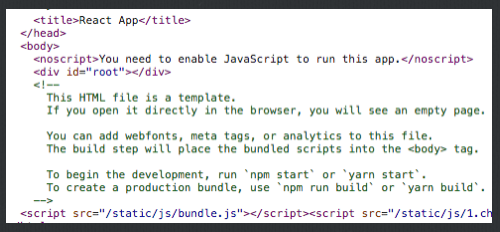

You have to admit it: create-react-app really helped our community out. You can focus all day on what it does NOT have, but it gave our community a boilerplate to rally behind. Just as important, it was designed to be extended and kept up to date with library updates. Other boilerplates have traditionally struggled to keep up because they rely so heavily on their maintainers to update them.

It sets up your app the official way the React maintainers think is the best way to set it up and is currently the most well-documented boilerplate we’ve ever seen.

Makes it easy for us to hand off projects to clients that already start with great docs and developer familiarity.

Confident that it will be well supported in the near future

It’s ejectable: if a project needs some overrides, you can eject to expose the underlying config files and customize away! Keep in mind that ejecting is a one-way operation.

Use a Package Manager with a Lock File

It is important to choose a package manager that uses a lock file. Every JS project has a package file (package.json) that lists all of the packages you need and their versions, but the versions you specify usually are not exact down to the patch number; a range like ^1.2.3 accepts any later 1.x release.

A lock file records the exact version of every package that was installed based on the package file. Without a lock file, when you spin up that project from scratch next year to make a change, it will almost certainly install some packages that don’t work together and send you down a rabbit hole.

Using a lock file ensures that you can make that critical change on the project without having to untangle a bunch of package dependencies. You get to focus on adding value, not wasting time on package management.

Before yarn existed, we used npm along with npm shrinkwrap, which created a lock file. However, running shrinkwrap was not automatic; it was an extra step that was easy to skip.

We originally chose yarn because it created a lock file automatically. The lock file records the exact version of each package we use in development, guaranteeing reproducibility of the code in production and in other developers’ environments. Since npm v5.0 added an automatic lock file (package-lock.json), both now include this feature.

Yarn is also substantially faster than npm due to its ability to install packages in parallel. Here are some benchmarks.

Commit the lock file into git.

Also, it might seem obvious, but make it clear which package manager the project uses. You don’t want one dev using yarn and another using npm: each uses a different lock file (yarn.lock vs. package-lock.json), and if both get committed into the repo, it can lead to confusion and errors that are hard to catch.

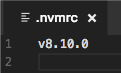

Node Version Manager (nvm)

JavaScript projects are notorious for being vague about their Node.js requirements, usually saying nothing at all or only specifying a minimum major version (>=). That is a problem because a year from now, the latest version of Node might introduce an incompatibility. I mean really, who even knows?

Package upgrades should happen for a good reason, not just for the heck of it. We like to be specific about the exact Node version, for the same reproducibility reasons as a package lock file.

We use nvm and a .nvmrc file in our codebase for the same reason we use a lock file: a reproducible environment. Here is an example:

It makes it really easy for a developer new to the code base to get up and going.

Code Style

Our philosophy is to enforce code readability and consistency across developers without burdening them with memorizing style and formatting rules. We accomplish this by adding linter and EditorConfig files to every project.

Linter

We build our .eslintrc files on top of the linter configuration that comes with create-react-app.

Unless you eject, you don’t have much flexibility to customize the lint rules that create-react-app reports as console warnings during “yarn start”. We often don’t eject, so we add our own rules in a .eslintrc file and let our editor help us with them.

In VS Code, you can install the “ESLint” extension.

You can then add stricter rules than the create-react-app defaults. Because the editor is what shows them, they won’t show up in the command-line console for "yarn start".
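As an illustration, a stricter config might look like the sketch below. It is written as .eslintrc.js (ESLint also accepts JS config files), assumes the eslint-config-react-app package that create-react-app uses is resolvable from your project, and picks core ESLint rules that flag some of the code smells listed earlier. The specific thresholds are our own assumptions, not create-react-app recommendations.

```javascript
// .eslintrc.js: a sketch of layering stricter rules on top of create-react-app's.
// Assumes eslint-config-react-app (shipped with create-react-app) is resolvable.
module.exports = {
  // Start from the same rules create-react-app uses
  extends: 'react-app',
  rules: {
    // "Lines that are too long" (threshold is illustrative)
    'max-len': ['warn', { code: 100, ignoreUrls: true }],
    // "Too many arguments"
    'max-params': ['warn', 4],
    // "Large classes": warn when a single file grows too big
    'max-lines': ['warn', { max: 300, skipBlankLines: true, skipComments: true }],
    // A small guard against one kind of duplicated code
    'no-duplicate-imports': 'warn',
  },
};
```

These only show up in the editor through the ESLint extension, not in the "yarn start" console.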

Editor Configuration File

We use an .editorconfig file to define and enforce several formatting rules within the editor, such as indentation with spaces, line endings, and a final newline at the end of each file.

In VS Code you need to install the “EditorConfig for VS Code” extension.

We are growing more comfortable with code formatters that run automatically on save, like Prettier. It is best to start a project with one instead of introducing it later, when it might produce big commit diffs that are nothing but code style changes.

Seeing which transformations the formatter makes has a great side effect: like in-editor linter feedback, it teaches developers what changes are needed to meet the code style guidelines.

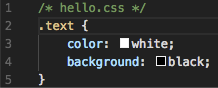

Styles (.css files vs. CSS-in-JS)

We prefer CSS files over CSS-in-JS, CSS modules, or inline styles. But this choice is often highly project dependent.

It's typically easier for designers to modify CSS files, as no JS/React knowledge is necessary.

We still split up the styles so we have one CSS/LESS/SASS file per component and include them alongside the component within the folder structure. We'll go through that folder structure later in this post.

While there are some benefits to putting styles in JS and/or applying them directly to components, we prefer CSS/LESS/SASS files because many of our projects go on to be maintained by our clients, often by designers updating the look and feel. Clients often tell us they don’t want their designers to have to understand React or JS, whereas any designer can understand a CSS/LESS/SASS file and change it after finding the class name with a simple inspect in the browser. This choice really depends on the technical capability of our clients.

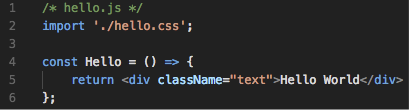

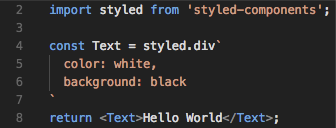

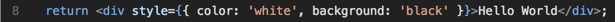

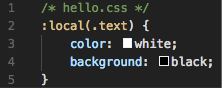

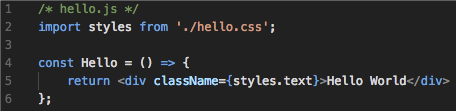

Here are some examples of these different types of styling within React.

CSS files

Here is a sample CSS file

Here is how to use it within JS

Styled Components

Styles inline

CSS Modules

CSS file

JS file

CSS Preprocessors (LESS vs. SASS)

We choose the one that is most popular with the libraries we use

Bootstrap v2/v3 used LESS so we have used LESS

Bootstrap v4 uses SASS so we plan to use SASS more often

Leave the generated CSS files and maps out of repo/codebase

When using “import ‘./mycomponent.css’” in components, remember that the imported styles are global. Avoid CSS naming collisions by giving the component’s parent element a unique className and scoping the component’s rules under it.

Folder Structure

It's hard to say there is one perfect folder structure. This is one of the design best practices where people hold strong opinions. The goal is a structure that makes sense, so another developer can come in, identify the pattern right away, and find it easy to follow.

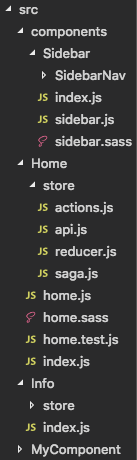

We give every component its own folder, which contains all related code and styles. Any sub-components can be included in sub-folders. This is most commonly referred to as a "fractal" structure, where every component folder has the same directory layout and types of files.

Here is a sample folder structure:

We put the React component code in a named ".js" file rather than a ".jsx" file, since .jsx is not recommended. In the structure above, an example is "src/components/Home/home.js", which contains the code for the "Home" component. Compared with putting everything in an index.js file within each component folder, this makes stack traces and the editor easier to read, since you aren't looking at a dozen files all named index.js.

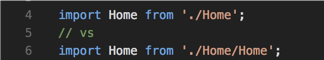

We reserve the index.js file only for doing exports.

With an index.js file like this:

We can then improve how our imports look:

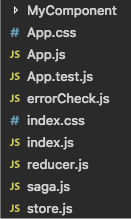

An example of that folder structure collapsed:

Why we chose this structure:

Project Code Best Practices

Use Redux in Most Cases

We use Redux almost exclusively to manage state within our apps.

Having one-way data flow coupled with the React Virtual DOM provides a great pattern for performant web apps.

Redux + Redux Dev Tools === Awesome

We have built a few apps that don't use Redux; that decision is usually based on how much data we are storing and pulling in from an API. However, most apps rely on data from an API, and the more complex that data is, the more you benefit from using Redux.

We've covered Redux a lot in previous tech presentations, so we won't cover Redux basics here, but several of our next design best practices concern specifics of Redux. Read up on Redux in the official docs if you need a refresher.

Use Action Creators in Redux

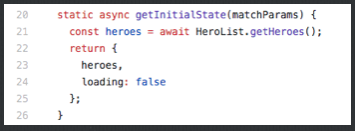

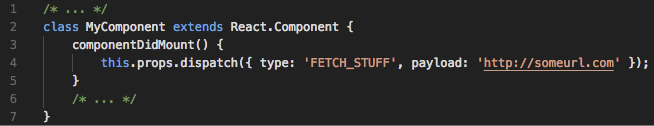

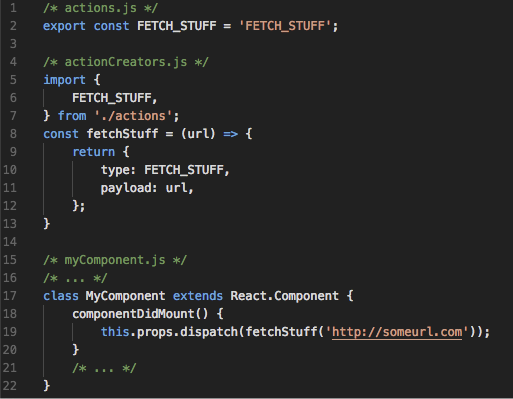

When you use actions in Redux, you dispatch an object that has a type, which identifies the action, and a payload that goes with it. Here is a simple example without an action creator:

The payload is very specific to the type of action, yet what you dispatch is this rather informal data structure. This makes it tough to track down what exactly the payload should be for a particular type of action.

If you use the Action Creator pattern, you turn that action into a named function that you can import, and you can formally define the data structures of its parameters using JSDoc or TypeScript. That makes actions easy to use across the code base.

In general, we try to minimize the amount of searching a developer has to do in order to use something. Action Creators help with this.
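A minimal sketch of the pattern, using a hypothetical ADD_TODO action. The action creator documents its parameters with JSDoc, so editors like VS Code can show the expected payload right at the call site instead of making developers search for it.

```javascript
// Action type constant, shared by the action creator and the reducer (hypothetical).
const ADD_TODO = 'ADD_TODO';

/**
 * @typedef {Object} AddTodoAction
 * @property {string} type - Always ADD_TODO.
 * @property {{ text: string, dueDate: (string|null) }} payload
 */

/**
 * Create an action that adds a todo item.
 * @param {string} text - Description of the todo.
 * @param {string|null} [dueDate=null] - Optional ISO date string.
 * @returns {AddTodoAction}
 */
function addTodo(text, dueDate = null) {
  return { type: ADD_TODO, payload: { text, dueDate } };
}

// Without an action creator, every caller builds the object by hand:
//   store.dispatch({ type: 'ADD_TODO', payload: { text: 'Buy milk' } });
// With one, the shape lives in a single, documented place:
//   store.dispatch(addTodo('Buy milk'));
```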

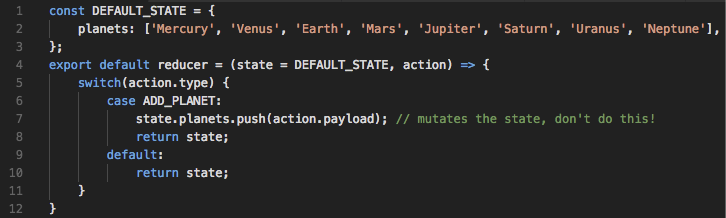

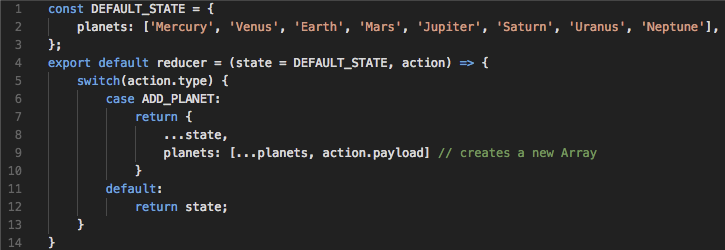

Use immutable data changes within your Redux reducers

Make only immutable data changes within your reducers to unlock the performance of your web app. Being immutable means you never change data in place. Instead, you create a new copy of the data that includes your changes.

This means you can use PureComponent instead of Component, as described in one of the upcoming design best practices. Because you never modify data in place, any change in your data store produces a new object reference instead of just updated properties on the existing object. A component can then detect the change in the most performant way possible, by comparing object references, which is a very fast operation for a JavaScript engine.

We don’t use ImmutableJS often, but probably should use it more often for the data structures inside the Redux store. We just design our reducers to be immutable by convention, which isn’t great. ImmutableJS would force us to have immutable data structures and prevent data management mistakes.

One very common place where you can easily mutate data instead of treating it as immutable is when you have an array in your Redux state.

Changing data by mutating data in place (bad):

Changing data by creating a copy, keeping the data immutable (good):

Will your app work even if you change data in place? Probably. Could it perform better if it treated all data as immutable? Almost always.
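To make the difference concrete, here is a minimal sketch with a hypothetical items array and hypothetical action types (ADD_ITEM, REMOVE_ITEM, UPDATE_ITEM). The first reducer mutates the array in place; the second uses spread syntax, filter(), and map() to return new objects, so changed data gets new references while unchanged items keep theirs.

```javascript
const initialState = { items: [] };

// BAD: mutates the existing array, so the returned state is the same object.
function itemsReducerMutating(state = initialState, action) {
  switch (action.type) {
    case 'ADD_ITEM':
      state.items.push(action.payload); // changes data in place
      return state; // same reference as before, so a shallow compare sees no change
    default:
      return state;
  }
}

// GOOD: returns new objects and arrays, leaving the previous state untouched.
function itemsReducer(state = initialState, action) {
  switch (action.type) {
    case 'ADD_ITEM':
      return { ...state, items: [...state.items, action.payload] };
    case 'REMOVE_ITEM':
      return { ...state, items: state.items.filter((item) => item.id !== action.payload.id) };
    case 'UPDATE_ITEM':
      return {
        ...state,
        // Only the matching item is copied; the others keep their references.
        items: state.items.map((item) =>
          item.id === action.payload.id ? { ...item, ...action.payload } : item
        ),
      };
    default:
      return state;
  }
}
```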

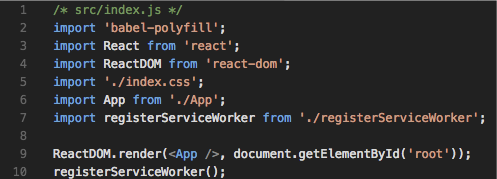

Use Babel-Polyfill

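Using ES6 features can cause problems in Firefox and Internet Explorer, specifically Array.from, other Array methods, and some Map methods. create-react-app compiles newer syntax for older browsers, but it does not polyfill most newer built-in methods like these.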

We choose to take the code size hit (50-60kb) and not limit our usage of ES6 features

Changing the Babel configuration would require ejecting from create-react-app, and we would rather not do that.

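$ yarn add babel-polyfill

Then import it once, at the very top of your app's entry point (src/index.js in create-react-app): import 'babel-polyfill';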

If you have already ejected from create-react-app, or didn't start with create-react-app and control the Babel build process, I'd just change the Babel target instead.

Error Handling

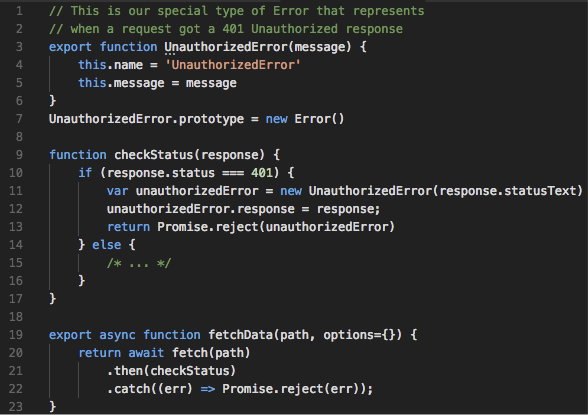

Transform common non-descriptive errors into more useful ones.

We transform common errors into more descriptive ones (similar to the way Python does exceptions). For example, when we receive a 401 Unauthorized response from the backend, we transform it into a custom UnauthorizedError and re-throw it. UnauthorizedError is a class we define that inherits from the built-in Error class.

This makes code that depends on the error type easier to read and keeps the transformation logic in one central location.
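A minimal sketch of that idea, assuming a fetch()-style response object with status and statusText. checkResponse() and describeError() are hypothetical helpers for illustration, not part of any library.

```javascript
// Custom error for 401 responses; extending Error keeps the stack trace and instanceof checks.
class UnauthorizedError extends Error {
  constructor(message = 'Unauthorized', response = null) {
    super(message);
    this.name = 'UnauthorizedError';
    this.response = response; // keep the original response for debugging
  }
}

// Hypothetical central helper: run every API response through it before using the body.
function checkResponse(response) {
  if (response.status === 401) {
    throw new UnauthorizedError('Your session has expired, please log in again', response);
  }
  if (response.status < 200 || response.status >= 300) {
    throw new Error(`Request failed: ${response.status} ${response.statusText}`);
  }
  return response;
}

// Calling code branches on the error type instead of on raw status codes.
function describeError(err) {
  if (err instanceof UnauthorizedError) return 'redirect-to-login';
  return 'show-generic-error';
}
```

One caveat worth checking in your own build: older Babel setups that compile classes down to ES5 (Babel 6, for example) can break instanceof checks on subclasses of built-ins like Error. Babel 7 handles this case.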

Send unhandled errors automatically to a bug monitoring service

Sentry is our favorite service for collecting errors from our frontend apps.

The point is to find out about errors and face the reality of your app, not to ignore them because front-end JS errors aren’t as easy to track as back-end errors. Add something that relays your users' errors to a tracking service, get notified when they happen, and build reviewing those errors into your application support model.

Sentry is great, and when configured properly it can also send your Redux state along with each error.

raven-js is the official Sentry JavaScript package you need to get up and running with Sentry.

raven-for-redux is the redux integration package we prefer. It sends the redux state and recent redux action history to Sentry. It also provides an easy way to include user information with each error (although this should be in the redux state already).

Version Checking

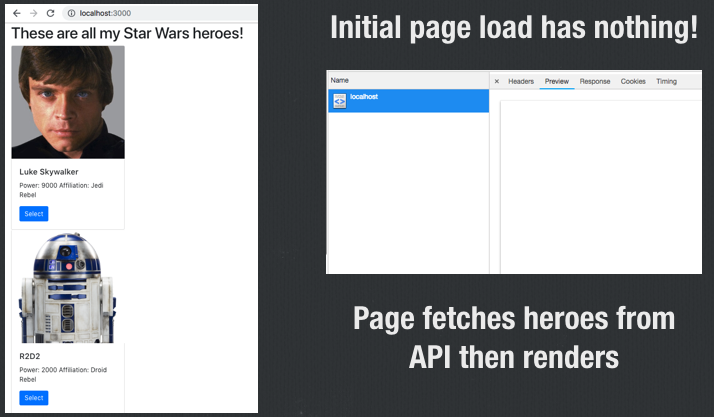

Problem:

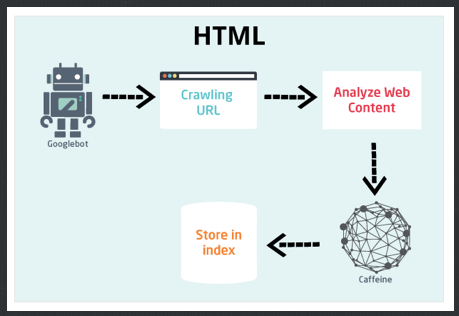

What if your users are still using an old version of your SPA because they haven’t refreshed in a week?

How do they get your newest code?

You want bug fixes in users' hands as soon as possible, but nothing does this for you automatically; you need to take care of it yourself. In a React single-page app, nothing forces the user to reload the browser, which is what re-fetches index.html and brings in your new JS and CSS code.

Solution:

Track the running and released version of your React code.

When you detect that they differ, either prompt the user to refresh the app or force a reload, which re-fetches index.html and brings in the new code.

We put the released version in the public/manifest.json file, which is part of every create-react-app project.

When our app first loads, we fetch the manifest file and save its version as our running version.

Throughout our app's lifecycle, we fetch that manifest file again and compare its version to the running version. You need to make sure the manifest request doesn't return a cached copy, so we usually append a throwaway query parameter, like a timestamp: /manifest.json?t=382323823
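A minimal sketch of the version check, assuming you add a top-level "version" field to public/manifest.json yourself (create-react-app's default manifest doesn't include one). manifestUrl(), hasNewVersion(), fetchReleasedVersion(), and checkVersion() are hypothetical names. How often you call checkVersion() and whether you prompt or force a reload are up to you.

```javascript
// Assumes public/manifest.json contains a "version" field that you bump on each release.

// Cache-busting URL so neither the browser nor a CDN serves a stale manifest.
function manifestUrl(now = Date.now()) {
  return `/manifest.json?t=${now}`;
}

// True when the released version is known and differs from the running one.
function hasNewVersion(runningVersion, releasedVersion) {
  return Boolean(releasedVersion) && releasedVersion !== runningVersion;
}

// Browser-only: fetch the currently released version.
async function fetchReleasedVersion() {
  const response = await fetch(manifestUrl(), { cache: 'no-store' });
  const manifest = await response.json();
  return manifest.version;
}

let runningVersion = null; // set on first load

// Call once on startup, then periodically (for example with setInterval).
async function checkVersion() {
  const releasedVersion = await fetchReleasedVersion();
  if (runningVersion === null) {
    runningVersion = releasedVersion; // first load: remember what we are running
  } else if (hasNewVersion(runningVersion, releasedVersion)) {
    // Prompt here; you could also reload without asking.
    if (window.confirm('A new version is available. Reload now?')) {
      window.location.reload();
    }
  }
}
```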

Component Choices

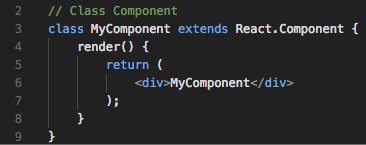

Function vs. Class

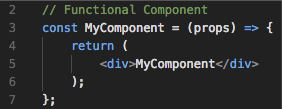

Choose functions when possible. In general, always choose the simplest solution to a problem.

Pros - simpler, easier to understand, more memory efficient, easier to test

Cons - Lack lifecycle methods and state

Functional component:

Equivalent Class component:

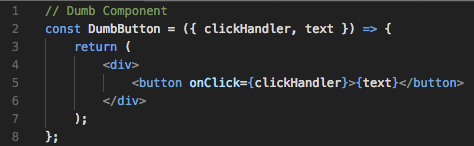

Dumb vs. Smart

Dumb/presentational components present things and generally should be pure components. Pure means they have only inputs and outputs, with no side effects. The word "pure" mirrors its use in "pure functions": a function defined only by its arguments (inputs) and its return value (output), with no other side effects.

Notice that when you click the button in this dumb component, it just passes the event out. It does not store the data; it only passes the data up to its caller:

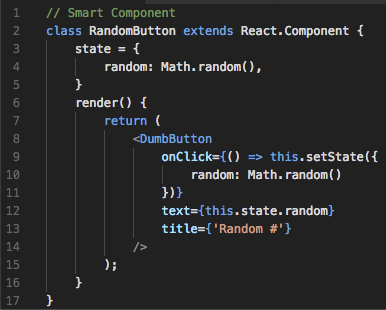

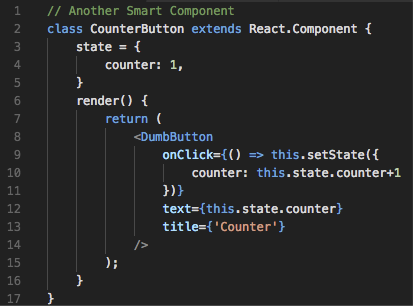

Smart/container components manipulate/provide data to other components

When possible, decouple data handling from the markup by putting the data handling in a smart component and the markup in a dumb component

Allows reusing dumb components with multiple smart components

Here are a couple of smart components that use that same dumb component

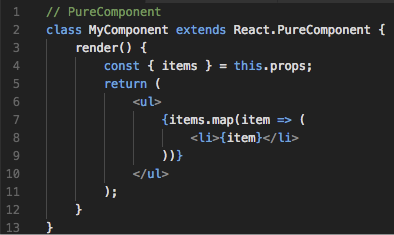

PureComponent vs. Component

Use React.PureComponent when possible

Only re-renders when data has changed at the highest level.

Works great with immutable data

Improves performance, prevents unnecessary re-renders

Easy to add - one line modified

Here is a React.PureComponent and a React.Component in code.

React.PureComponent:

React.Component:

Only line 2 changed

The big change happens in shouldComponentUpdate(), which in a React.Component returns true by default. PureComponent overrides it with a shallow comparison of props and state: each top-level value is compared by reference, so it only detects a change when a reference is different, which is exactly what happens when we use immutable data in our store.
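A simplified sketch of that shallow comparison follows. It is not React's actual source, just the idea: each top-level prop or state value is compared by reference with Object.is, so mutating an array in place goes unnoticed while replacing it with a new array is detected.

```javascript
// Simplified shallow comparison in the spirit of what PureComponent does.
function shallowEqual(a, b) {
  if (Object.is(a, b)) return true;
  if (typeof a !== 'object' || a === null || typeof b !== 'object' || b === null) return false;
  const keysA = Object.keys(a);
  const keysB = Object.keys(b);
  if (keysA.length !== keysB.length) return false;
  // Compare each top-level value by reference only; nested contents are not inspected.
  return keysA.every(
    (key) => Object.prototype.hasOwnProperty.call(b, key) && Object.is(a[key], b[key])
  );
}

// Roughly what PureComponent's shouldComponentUpdate boils down to.
function pureShouldUpdate(prevProps, nextProps, prevState, nextState) {
  return !shallowEqual(prevProps, nextProps) || !shallowEqual(prevState, nextState);
}
```

With a mutated array, the "items" prop is still the same reference, so the component would skip a render it actually needed; with an immutable update, the new reference triggers the render.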

Side Effects - Thunks vs. Sagas vs. Epics

Side effects are most commonly async API calls that, when finished, will update the Redux store.

Thunks, while simple, do not give the flexibility that we need in most large applications.

Sagas give greater flexibility, like only taking actions when you want to, while not deviating from the familiar redux logic flow.

Epics introduce streams, which are, in our opinion, not as straightforward and don’t have any significant benefits over Sagas, so in most cases we just use Sagas.

The main point is to have a flexible, easy to use way of managing side effects within your React + Redux application, and we like Sagas or Epics over Thunks.

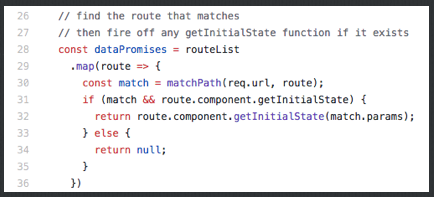

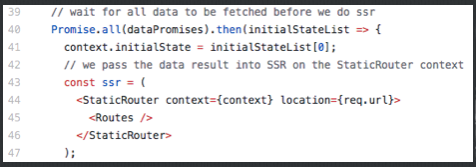

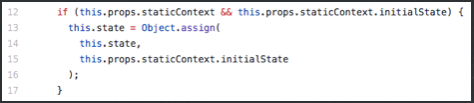

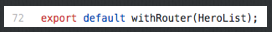

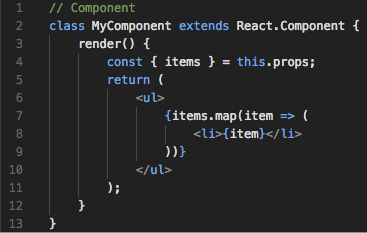

Routers

History

React Router was the first go-to routing solution. With the introduction of Redux, application state and routing state became separate, so react-router-redux was written to live alongside React Router. This introduced multiple sources of props: all of a sudden, when you had query parameters, you had both your props and ownProps. So instead of Redux managing all state, state was split between Redux and the URL. This always seemed a little clunky to work with.

We really liked how the Redux Little Router project understood the problem and solved it the way we expected. Redux Little Router basically took the React Router philosophy but moved its routing state into Redux’s application state.

Then Redux-First Router, another evolution of the same concept, takes it a step further by removing components whose sole purpose is routing (<Route /> and <Fragment />), doing away with the more imperative elements of React Router and Redux Little Router.

React Router

We have used this in past projects (even with Redux) and it is the obvious choice for applications not using Redux because it has great support and lots of users.

Redux Little Router

We feel this would be a good alternative to React Router if Redux-First Router didn’t exist, but it seems reluctant to stray too far from familiar React Router territory. It did a lot to inspire the routers that followed.

Redux-First Router

This is our go-to on all projects where we are using Redux. It fits seamlessly into our Redux store and offers many possibilities for triggering side effects based on specific route changes.

Instead of a single action type for any route change, every route change has a different action.

For error reporting, having a different action per route also gives us a consistent history of a user’s interactions, which we use when trying to reproduce user errors.

We also wrap routing in action creators, so we can write things like goHome() or goVideoDetail(video_id).
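A minimal sketch, assuming hypothetical HOME and VIDEO_DETAIL routes. In redux-first-router, a routes map ties each action type to a path, and dispatching an action of that type changes the URL, with payload keys filling the path parameters. The pathFor() helper below is our own illustration of that mapping, not the library's implementation.

```javascript
// Route action types (hypothetical names for this example).
const HOME = 'HOME';
const VIDEO_DETAIL = 'VIDEO_DETAIL';

// Routes map: action type -> URL path. In an app, this is what you hand to
// redux-first-router's connectRoutes(); here it is shown as plain data.
const routesMap = {
  [HOME]: '/',
  [VIDEO_DETAIL]: '/videos/:videoId',
};

// Action creators: dispatching these changes the route, and therefore the URL.
const goHome = () => ({ type: HOME });
const goVideoDetail = (videoId) => ({ type: VIDEO_DETAIL, payload: { videoId } });

// Illustration only: roughly how a route action maps onto its URL.
function pathFor(action) {
  const pattern = routesMap[action.type];
  const params = action.payload || {};
  return pattern.replace(/:(\w+)/g, (_, name) => encodeURIComponent(params[name]));
}
```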

Testing

No opinion on which testing libraries to use; we’ve used a lot of them over the years

Create-react-app comes with Jest, so we tend to use that in React projects

Most of our design opinions regarding tests come down to what amount of testing is right for a particular project. Ideally, 100% coverage is always the target, but that’s almost never practical or realistic.

Tests have diminishing returns, so prioritize which tests to complete first

Priorities

Test complex code that is prone to bugs or missed corner cases with future modifications

Test commonly accessed code, like code that runs on most interactions.

Write tests that give a lot of code coverage with little test code or work.

If you encounter a bug that could have been prevented with a test, that’s a good excuse to write one.

Documentation in Code

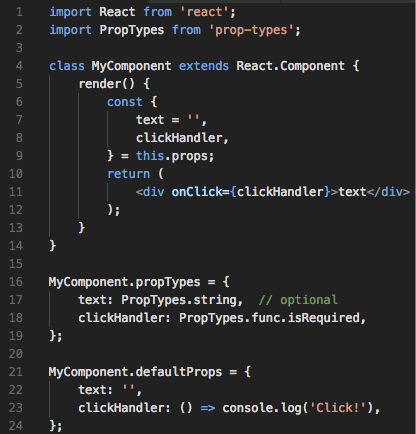

React PropTypes

Try to always use them

Helps developers prevent logic errors that may otherwise be difficult to trace.

Provides a way to document what parameters a function/class accepts using standardized code that’s easy to understand.

React defaultProps

A more explicit way of setting defaults in a standard way.

When using PropTypes, defaultProps provide a way to set default values for props. If you set defaults the conventional way, in the function definition, PropTypes never sees those default values; for example, a prop marked isRequired whose default comes from the function signature still triggers a warning when it is omitted. defaultProps are applied before the props go through PropTypes validation.

Typescript

Makes sense on projects that reach a bigger scale with code size, number of devs, complexity, or life span, but in our opinion overkill on most smaller projects.

This is another choice where we consider the technical capability of the client team we would be handing the project to.

On bigger projects, it’s really amazing for creating self-documenting code and allows developers to easily use new code they haven’t seen before.

Searching DefinitelyTyped for missing @types packages can still be a pain, because there’s no guarantee that types exist for a particular library.

Our friend “any” has come to the rescue many times.

JSDoc

On projects that don’t use TypeScript, it’s important to comment properly. VS Code picks up JSDoc pretty well, so we use it for typedefs and still get pretty good autocomplete within the editor.

This is the most common standard for documenting JS code, so we use it as much as possible when writing comments.
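For example, documenting a hypothetical Video type with @typedef lets VS Code autocomplete video.durationSeconds inside any function annotated with @param {Video}:

```javascript
/**
 * @typedef {Object} Video
 * @property {number} id - Unique video id.
 * @property {string} title - Display title.
 * @property {number} durationSeconds - Length of the video in seconds.
 */

/**
 * Format a video's length as m:ss for display.
 * @param {Video} video - The video to describe.
 * @returns {string} For example "3:07".
 */
function formatDuration(video) {
  const minutes = Math.floor(video.durationSeconds / 60);
  const seconds = video.durationSeconds % 60;
  // Pad seconds to two digits so 7 seconds renders as "07".
  return `${minutes}:${String(seconds).padStart(2, '0')}`;
}
```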

Wrap-up

If you read this whole article, you are clearly a great human being. Connect with me, Jeremy Zerr, on LinkedIn and let me and my team help you build great software!